Computer vision-based manipulation

This page is a new description of the computer-vision-based manipulation project. The older description can be found here.

Contributors

Anirudh Aatresh, Christopher Nesler, Joshua Roney

Summary

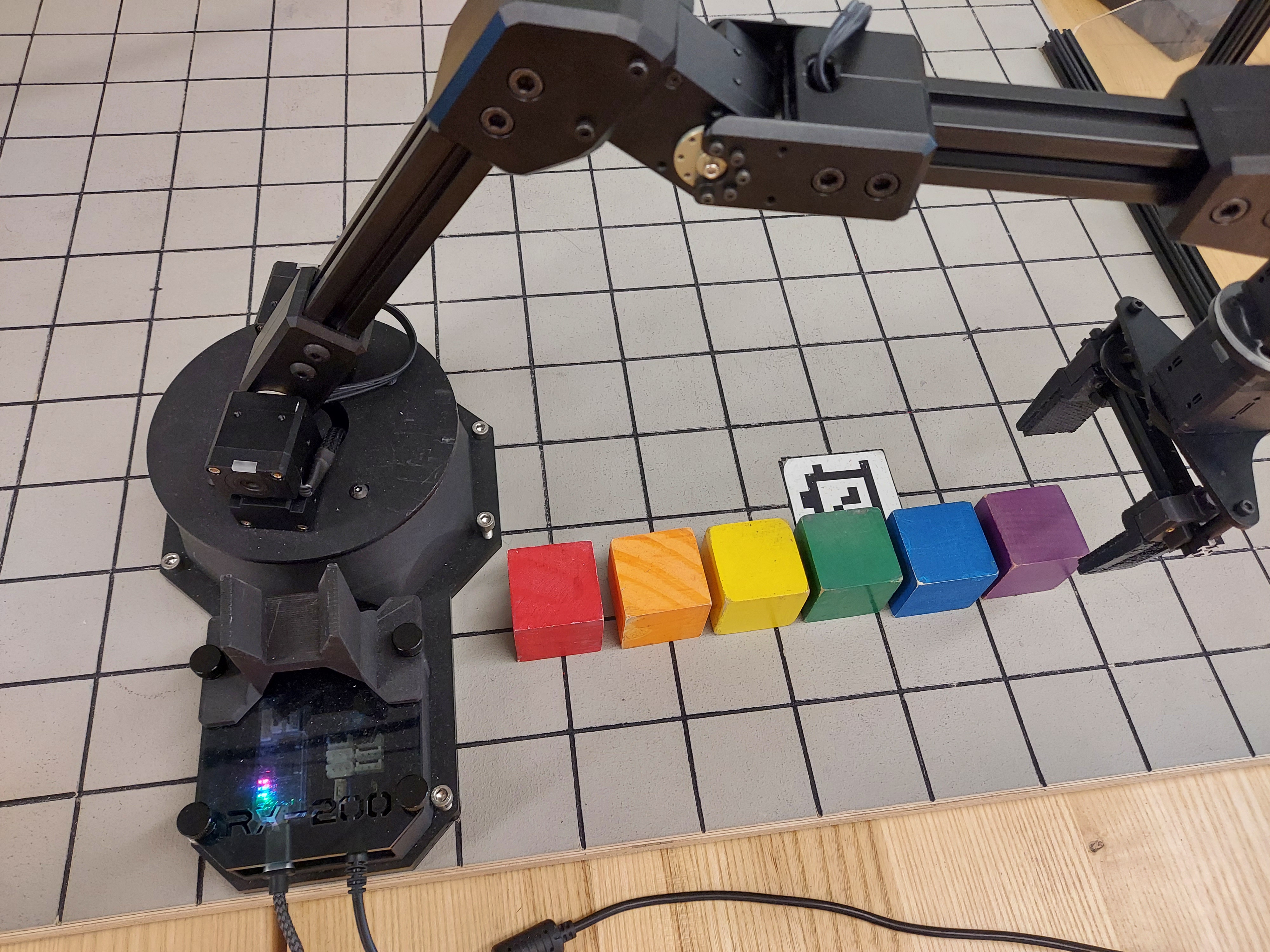

As part of ArmLab in the ROB 550 course, my team and I worked towards building a robust manipulation system that uses a 6-DOF robotic arm and an Intel RealSense RGB-D camera to perform pick-and-place tasks on colored blocks.

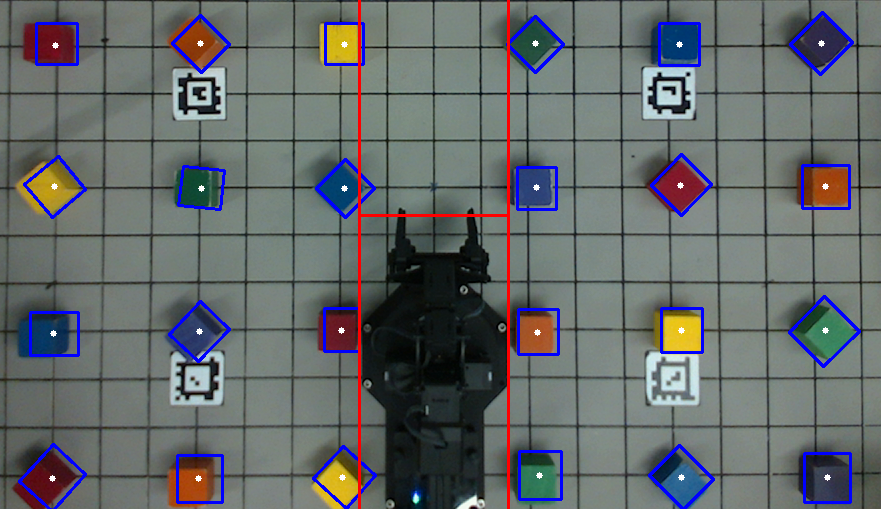

We implemented forward and inverse kinematics for the robotic arm in Python. This let the arm move to any location in its valid workspace and move linearly between configurations. The RGB-D camera provided RGB images along with a depth channel measured by the camera's LiDAR sensor. We read the raw image and depth data from the camera through ROS, then processed them with a block identification and pose estimation algorithm built with the OpenCV library in Python. For every valid block in the workspace, this algorithm recovered the block's color, size, location, and orientation, which let the manipulator reliably grasp whichever block it was targeting.
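Forward kinematics for a serial arm is commonly written as a chain of Denavit-Hartenberg (DH) link transforms. The sketch below shows that idea using the standard DH convention. It is not the project's actual code. The function names and the `TOY_DH` table are hypothetical, and the table describes a simple two-link planar arm rather than the real 6-DOF arm's parameters.

```python
import numpy as np

def dh_transform(theta, d, a, alpha):
    """4x4 homogeneous transform for one link (standard DH convention)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ])

def forward_kinematics(joint_angles, dh_table):
    """Multiply per-link transforms to get the base-to-end-effector pose.

    Each dh_table row is (theta_offset [rad], d [m], a [m], alpha [rad]);
    the commanded joint angle is added to theta_offset.
    """
    if len(joint_angles) != len(dh_table):
        raise ValueError("need one joint angle per DH row")
    T = np.eye(4)
    for q, (theta_offset, d, a, alpha) in zip(joint_angles, dh_table):
        T = T @ dh_transform(q + theta_offset, d, a, alpha)
    return T

# Hypothetical toy table: two planar links of 0.2 m each (not the real arm).
TOY_DH = [
    (0.0, 0.0, 0.2, 0.0),
    (0.0, 0.0, 0.2, 0.0),
]

T = forward_kinematics([0.0, np.pi / 2], TOY_DH)
print(T[:3, 3])  # approximately (0.2, 0.2, 0.0)
```

Inverse kinematics runs in the other direction, solving for joint angles that produce a desired end-effector pose. A forward model like this one is a convenient way to check any IK solution.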

We used this system to perform autonomous pick-and-place tasks, such as sorting and stacking objects of interest identified by the vision system.
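Sorting and stacking both require converting a detected block's pixel location and depth reading into workspace coordinates the arm can move to. One common way to do this, shown here as a minimal sketch and not necessarily the project's method, is pinhole back-projection followed by a camera-to-world extrinsic transform. The function names, the intrinsics `EXAMPLE_K`, and the extrinsics `EXAMPLE_T_WORLD_CAM` are all hypothetical. The sketch also assumes the depth value has already been converted to meters along the camera's optical axis.

```python
import numpy as np

def deproject_pixel(u, v, depth_m, K):
    """Back-project pixel (u, v) with depth (meters) to camera-frame XYZ (pinhole model)."""
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return np.array([x, y, depth_m])

def camera_to_world(p_cam, T_world_cam):
    """Map a camera-frame point into the world frame with a 4x4 homogeneous transform."""
    p_h = np.append(p_cam, 1.0)
    return (T_world_cam @ p_h)[:3]

# Hypothetical intrinsics: focal length 900 px, principal point (640, 360).
EXAMPLE_K = np.array([
    [900.0,   0.0, 640.0],
    [  0.0, 900.0, 360.0],
    [  0.0,   0.0,   1.0],
])

# Hypothetical extrinsics: camera 1.0 m above the table, looking straight down
# (rotated 180 degrees about x, so camera +z points along world -z).
EXAMPLE_T_WORLD_CAM = np.array([
    [1.0,  0.0,  0.0, 0.0],
    [0.0, -1.0,  0.0, 0.0],
    [0.0,  0.0, -1.0, 1.0],
    [0.0,  0.0,  0.0, 1.0],
])

# A block centroid seen at the image center with 0.95 m of depth.
block_top = camera_to_world(deproject_pixel(640, 360, 0.95, EXAMPLE_K), EXAMPLE_T_WORLD_CAM)
print(block_top)  # approximately (0.0, 0.0, 0.05)
```

In a real setup, K would come from camera calibration (or the camera's reported intrinsics), and the extrinsic transform would be estimated, for example from known markers on the board.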

Useful links

Gallery