SLAM-based Autonomous Mobile Robot

This page is a new description of the SLAM-based autonomous mobile robot project. The older description can be found here.

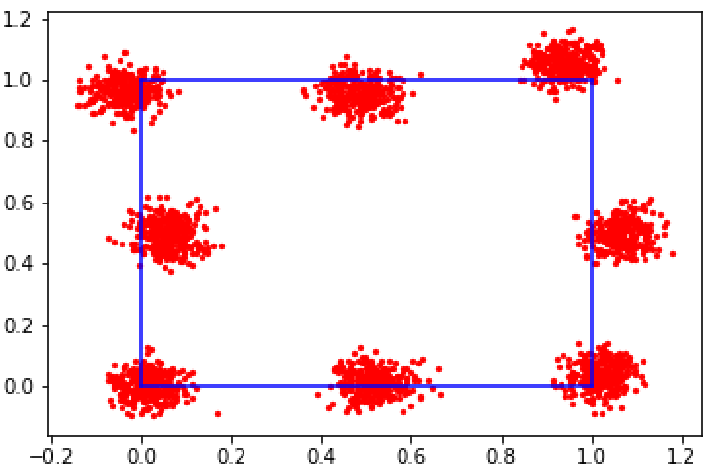

Monte-Carlo localization in a maze-like environment.

Contributors

Anirudh Aatresh, Devansh Agrawal, Shreya Phirke.Summary

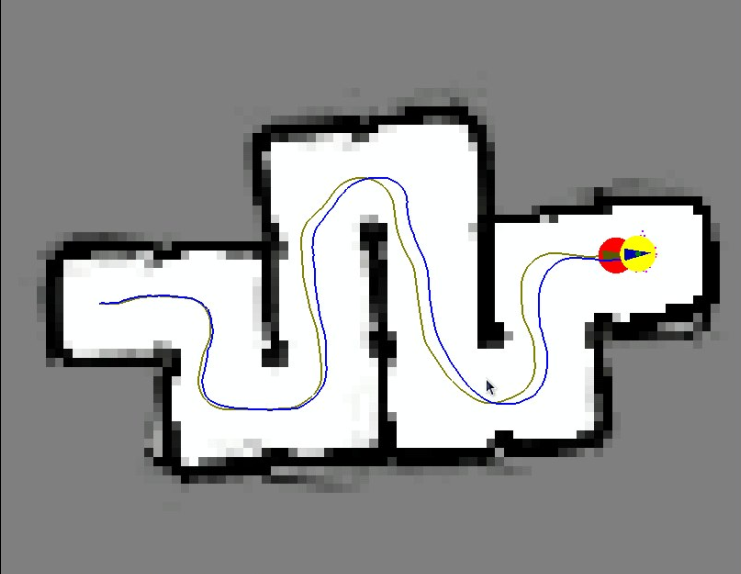

As part of BotLab in the ROB 550 course, my team and I worked towards building a robust SLAM and planning system for a 2 DOF autonomous mobile robot to navigate and explore maze-like environments.

The mobile robot we were working with was equipped with an RP-LIDAR, 3-axis IMU, wheel encoders, Beagle Bone Blue board, and Raspberry Pi 4. The RP-LIDAR was capable of collecting range measurements 360° around the robot. The Beagle Bone Blue board was used to perform low-level control, interface with the wheel encoders and IMU, and perform velocity control using PID. The Raspberry Pi 4 was used to achieve higher level position control, also using PID. This board was also used to interface with the Beagle Bone Blue board and the RP-LIDAR to perform SLAM using Monte Carlo localization and A* path planning.

The RP-LIDAR was used to map the environment and build a map of its environment using log odds to fill an occupancy grid. The robot's pose was localized in this map through SLAM, which used a particle-filter-based approach (Monte Carlo localization). The resulting map and localized pose were then used to perform path planning using the A* algorithm, allowing the robot to move from one cell to another in the grid. This planning algorithm gave the robot autonomous navigation capabilities. Furthermore, a frontier exploration algorithm was implemented, allowing the robot to explore environments autonomously.

Useful links

Gallery